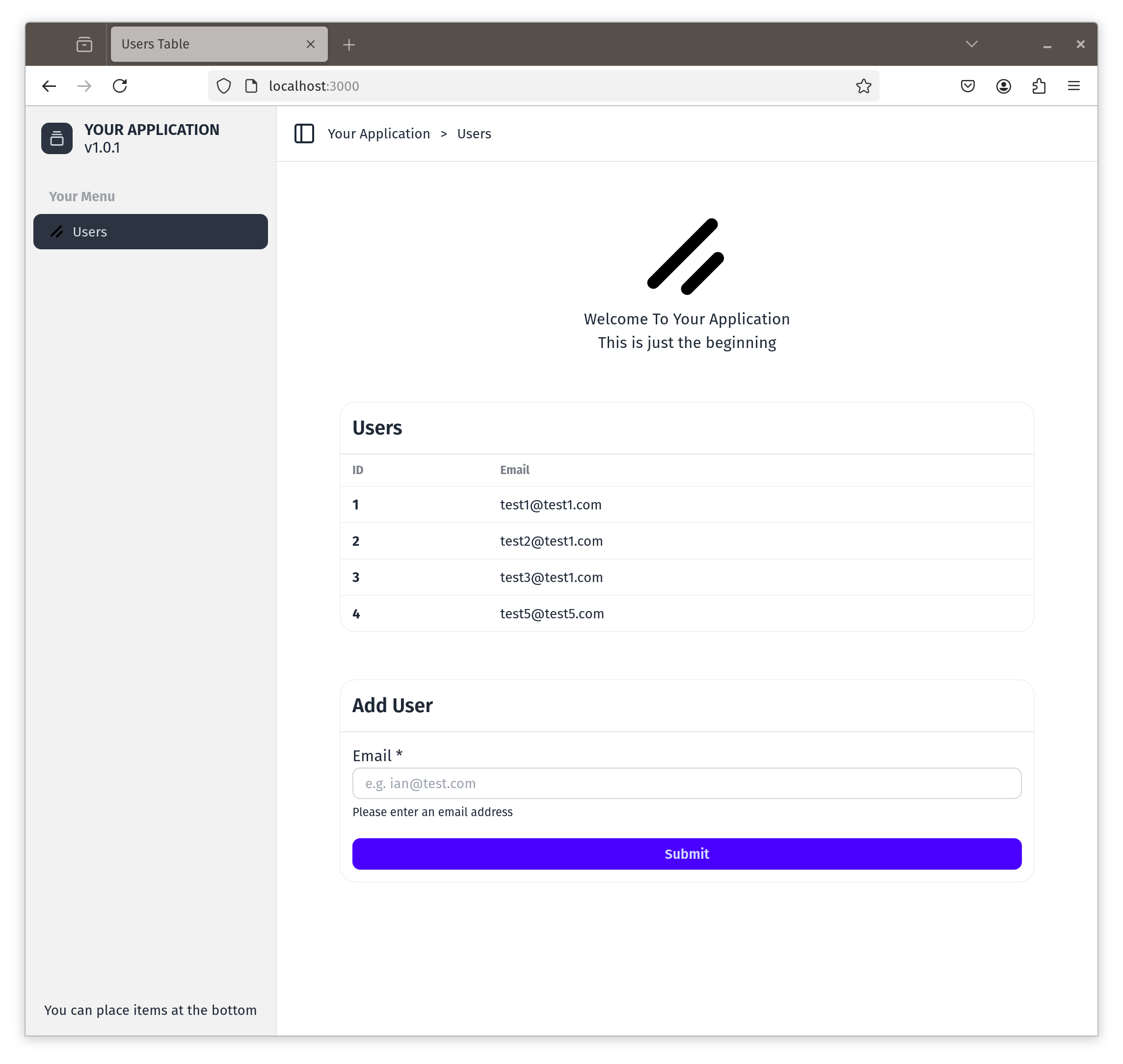

Server-side rendering (SSR) gives you a simple, low-code way to build applications, and we give you several ways to add interactivity when you need it.

Auto-generate Rust functions from SQL definitions

React-like components to build your UI

Axum for high-performance routing
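Here is a minimal, dependency-free Rust sketch of how these pieces might fit together on one server-rendered page. All names (`User`, `get_users`, `UserRowProps`, `user_row`, `user_list`, `render_page`) are hypothetical. `get_users` stands in for a function that a SQL code generator might emit, but it returns fixed data instead of querying a database. The "components" are plain functions from props to an HTML `String`, not a real component library. Axum itself is not shown: in an Axum app, the rendered string would be returned from a route handler.

```rust
// Hypothetical row type, shaped like what a SQL-to-Rust generator might
// emit for a query such as: SELECT id, email FROM users ORDER BY id
#[derive(Debug, Clone)]
pub struct User {
    pub id: i32,
    pub email: String,
}

// Hypothetical stand-in for a generated query function. A real generated
// function would run the SQL against a database; this one returns fixed data.
pub fn get_users() -> Vec<User> {
    vec![
        User { id: 1, email: "alice@example.com".to_string() },
        User { id: 2, email: "bob@example.com".to_string() },
    ]
}

// Escape user-supplied text before interpolating it into HTML.
fn escape_html(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '&' => out.push_str("&amp;"),
            '<' => out.push_str("&lt;"),
            '>' => out.push_str("&gt;"),
            '"' => out.push_str("&quot;"),
            '\'' => out.push_str("&#39;"),
            _ => out.push(c),
        }
    }
    out
}

// Props for a React-like component: a struct passed into a render function.
pub struct UserRowProps<'a> {
    pub user: &'a User,
}

// A child component: props in, markup out.
pub fn user_row(props: UserRowProps) -> String {
    format!(
        "<li data-id=\"{}\">{}</li>",
        props.user.id,
        escape_html(&props.user.email)
    )
}

// A parent component composes child components, as in React.
pub fn user_list(users: &[User]) -> String {
    let rows: String = users
        .iter()
        .map(|user| user_row(UserRowProps { user }))
        .collect();
    format!("<ul>{}</ul>", rows)
}

// The whole page is rendered to a String on the server (SSR); a web
// framework handler would send this string back as the response body.
pub fn render_page() -> String {
    let users = get_users();
    format!(
        "<!DOCTYPE html><html><body><h1>Users</h1>{}</body></html>",
        user_list(&users)
    )
}

fn main() {
    println!("{}", render_page());
}
```

Keeping components as ordinary functions means they compose with normal Rust calls and are type-checked like any other code. Escaping at the component boundary keeps values from the database from injecting markup.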
